Medium-term interoperability challenges

[Written in consultation with the Interoperability Working Group chairs]

In our last essay, we explored the means and ends of interoperability targets and roadmapping across stacks and markets. “Interoperability,” like “standardization,” can be a general-purpose tool or an umbrella of concepts, but rarely works from the top-down. Instead, specific use-cases, consortia, contexts, and industries have to take the lead and prototype something more humble and specific like an “interoperability profile”-- over time, these propagate, get extended, get generalized, and congeal into a more universal and stable standard. Now, we’ll move on to some technical problem areas ripe for this kind of profile-first interoperability work across stacks.

What makes sense to start aligning now? What are some sensible scopes for interoperating today, or yesterday, to get our fledgling market to maturity and stability as soon as safely possible?

From testable goals to discrete scopes

The last few months have seen a shift in terminology and approach, as many groups turn their attention from broad “interoperability testing” to more focused “profiles” that test one subset of the optionalities and capabilities in a larger test suite or protocol definition. Decoupling test suites from the multiple profiles each suite can test helps keep any one profile from ossifying into a “universal” definition of decentralized identity’s core featureset.
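To make the suite-versus-profile distinction concrete, here is a minimal sketch in Python. Every name in it (the capability strings, the `Profile` class, the two example profiles) is hypothetical and invented for illustration; it does not describe any actual DIF test suite.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet

# A test receives the capabilities an implementation declares and returns pass/fail.
Test = Callable[[FrozenSet[str]], bool]


def requires(*capabilities: str) -> Test:
    """Build a test that passes when every listed capability is supported."""
    return lambda supported: set(capabilities) <= supported


# The suite covers every optional feature. All names here are hypothetical.
SUITE: Dict[str, Test] = {
    "jwt-vc": requires("jwt-vc"),
    "ldp-vc": requires("ldp-vc"),
    "didcomm-v2": requires("didcomm-v2"),
    "oidc": requires("oidc"),
}


@dataclass(frozen=True)
class Profile:
    """A named subset of the suite's tests (hypothetical structure)."""
    name: str
    tests: FrozenSet[str]


def conforms(profile: Profile, supported: FrozenSet[str]) -> bool:
    """Run only the tests the profile selects, ignoring the rest of the suite."""
    return all(SUITE[name](supported) for name in profile.tests)


# Two profiles drawn from the same suite, each with its own priorities.
retail_bank = Profile("retail-bank", frozenset({"jwt-vc", "oidc"}))
supply_chain = Profile("supply-chain", frozenset({"ldp-vc", "didcomm-v2"}))

# An implementation that supports only part of what the suite can test.
wallet = frozenset({"jwt-vc", "oidc"})
```

In this sketch the same wallet conforms to one profile and not the other, and neither profile has to stand in for the whole suite.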

As with any other emerging software field, every use case and context has its own interoperability priorities and constraints that narrow down the technological solution space into a manageable set of tradeoffs and decisions. For instance, end-user identification at various levels of assurance is often the most important implementation detail for, say, a retail bank, and DID-interoperability (which is not always the same thing!) might be a hardware manufacturer’s primary concern in being able to secure hardware supply chains.

Every industry has its unique set of minimum security guarantees, and VC-interoperability is obviously front-of-mind for credentialing use-cases. For example, in the medical data space, “Semantics” (data interpretation and metadata) might be a harder problem (or a more political one) than the mechanics of identity assurance, since high standards of end-user privacy and identity assurance have already made for a relatively interoperable starting point. Which exact subset of the many possible interoperability roadmaps is safest or most mission-critical for a given organization depends on many factors: the regulatory context, the culture of the relevant sectors, its incentive-structures, and its business models.

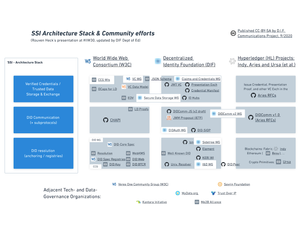

Cutting across industry verticals, however, are structural issues with how decentralized identity stacks and architectures vary, which can already be seen today. Applying “first principles” (or in our case, the “functions” of a decentralized identity system and its many moving parts) across use-cases and industrial contexts surfaces certain shared problems. As is our default approach in DIF Interop WG, we applied DIF’s in-house mental model of the “5 layers” of decentralized identity systems, sometimes called “the 4+1 layers”. (See also the more detailed version).

We call these the “4+1” layers because our group agreed that a strict layering was not possible, and that all the architectures we compared for using verifiable credentials and decentralized identifiers had to make major architectural decisions with consequences across all four of the more properly “layered” categories. This fifth category we named “transversal considerations,” since they traverse the layers and often come from architectural constraints imposed by regulation, industrial context, etc. Foremost among these are storage and authorization, the two most vexing and cross-cutting problems in software generally; these would justify an entirely separate article.

In a sense, none of the topics from this transversal category are good candidates for specification in the medium-term across verticals and communities-- they are simply too big as problems, rarely specific to identity issues, and being addressed elsewhere. These include “storage” (subject of our newest working group), “authorization” (debatably the core problem of all computer science!), “cryptographic primitives”, and “compliance.” (These last two are each the subject of a new working group, Applied Cryptography and Wallet Security!). These interoperability scopes are quite difficult to tackle quickly, or without a strong standards background. Indeed, these kinds of foundational changes require incremental advances and broad cooperation with large community organizations. This is slow, foundational work that needs to connect parallel work across data governance, authentication/authorization, and storage in software more generally.

Similarly, the fourth layer, where ecosystem-, platform-, and industry-specific considerations constrain application design and business models, is unlikely to crystallize into a problem space calling out for a single specification or prototype in the medium-term future. Here, markets are splintered and it is unclear what can be repurposed or recycled outside of its original context. Even if there were cases where specification at this layer would be timely, DIF members might well choose to discuss those kinds of governance issues at our sister-organizations in the space that more centrally address data governance at industry-, ecosystem-, or national- scale: Trust over IP was chartered to design large-scale governance processes, and older organizations like MyData.org and the Kantara Initiative also have working groups and publications on vertical-specific and jurisdiction-specific best practices for picking interoperable protocols and data formats.

That still leaves three “layers”, each of which has its own interoperability challenges that seem most urgent. It is our contention that each of these could be worked on in parallel and independently of the other two to help arrive at a more interoperable community-- and we will be trying to book presentation and discussion guests in the coming months to advance all three.

Scope #1: Verifiable Credential Exchange

The clearest, most consensus-building, and arguably most urgent way forward is to bring clarity to Verifiable Credential exchange across stacks. This has been the primary focus of our WG for the last year. Given that most of the early ecosystem-scale interest in SSI revolves around credentialing (educational credentials, employment credentials, health records), it is highly strategic to get translation and unified protocols into place soon for the interoperable verification and cross-issuance of credentials.

In fact, a lot of good progress has been made since Daniel Hardman wrote an essay on the Evernym blog making a pragmatic case for sidestepping differences in architecture and approach to exchange VCs sooner. This aligned with much of our group’s work in recent months, which has included a survey of VC formats among organizations producing “wallets” for verifiable credentials (be they “edge” wallets or otherwise). Our group has also sought to assist educational efforts at the CCG, in the Claims and Credentials working group, and elsewhere to make wallet-producing organizations aware of the relevant specifications and other references needed to make their wallets multi-format sooner and less painfully. Much of this work was crystallized into an article by co-chair Kaliya Young and crowd-edited by the whole group; this work was a major guiding structure for the Good Health Pass work that sought to make a common health record format (exported from FHIR systems) equally holdable and presentable across all of today’s VC systems.

One outgrowth of this effort and other alignments that have taken place since Hardman’s and Young’s articles is the work of prototyping a multi-community exchange protocol that allows a subset of each stack’s capabilities and modes to interoperate. This tentative, “minimum viable profile” is called WACI-PEx and is currently a work item of the Claims and Credentials working group. Work is ongoing on v0.1, and an ambitious, more fully-featured v1 is planned for after that. This profile acts as an “extension” of the broader Presentation Exchange protocol, giving a handy “cheat sheet” for cross-stack wallet-to-issuer/verifier handshakes so that developers not familiar with all the stacks and protocols being spanned have a starting point for VC exchanges. Crucially, the results of this collaborative prototype will be taken as inputs to future versions of the DIDComm protocols and the WACI specification for Presentation Exchange.
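For readers who have not yet worked with Presentation Exchange, the sketch below illustrates the basic idea of a verifier's request being matched against the credentials in a wallet. It is heavily simplified and should not be read as an implementation of the specification or of WACI-PEx: the key names (`input_descriptors`, `constraints`, `fields`, `path`, `filter`) follow the Presentation Exchange data model as we understand it, but this code only handles plain `$.a.b` paths rather than full JSONPath, and only the `const` keyword rather than full JSON Schema filters. The function names and sample credentials are invented for illustration.

```python
from typing import Any, Dict, List, Optional

_MISSING = object()  # sentinel for "path did not resolve"


def resolve_path(doc: Dict[str, Any], path: str) -> Any:
    """Resolve a simple '$.a.b' path. This is NOT a full JSONPath evaluator."""
    node: Any = doc
    trimmed = path[2:] if path.startswith("$.") else path
    for key in trimmed.split("."):
        if not isinstance(node, dict) or key not in node:
            return _MISSING
        node = node[key]
    return node


def field_matches(credential: Dict[str, Any], field: Dict[str, Any]) -> bool:
    """Check one field constraint against one credential (simplified)."""
    # A field lists alternative paths; this sketch uses the first that resolves.
    for path in field["path"]:
        value = resolve_path(credential, path)
        if value is _MISSING:
            continue
        flt = field.get("filter")
        # Only the 'const' keyword is checked here; other keywords are ignored.
        return flt is None or "const" not in flt or value == flt["const"]
    return False


def select_credentials(
    definition: Dict[str, Any], credentials: List[Dict[str, Any]]
) -> Dict[str, Optional[Dict[str, Any]]]:
    """Map each input descriptor id to the first credential satisfying all its fields."""
    selected: Dict[str, Optional[Dict[str, Any]]] = {}
    for descriptor in definition["input_descriptors"]:
        fields = descriptor.get("constraints", {}).get("fields", [])
        selected[descriptor["id"]] = next(
            (c for c in credentials if all(field_matches(c, f) for f in fields)),
            None,
        )
    return selected


# Invented sample data: a verifier asks for a bachelor's degree credential.
definition = {
    "id": "example-request",
    "input_descriptors": [{
        "id": "diploma",
        "constraints": {"fields": [{
            "path": ["$.credentialSubject.degree.type",
                     "$.vc.credentialSubject.degree.type"],
            "filter": {"type": "string", "const": "BachelorDegree"},
        }]},
    }],
}
held = [
    {"type": ["VerifiableCredential"], "credentialSubject": {"name": "Alice"}},
    {"type": ["VerifiableCredential"],
     "credentialSubject": {"degree": {"type": "BachelorDegree"}}},
]
```

Listing two alternative paths in one field is how a single request can address more than one credential encoding, which is the kind of cross-format handshake a profile is meant to pin down.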

Note: There has been some discussion of an OIDC-Presentation Exchange profile at some point in the future, but given that the alignment of DIDComm and Presentation Exchange started over a year ago, the most likely outcome is that work on this would not start until after v1 of the “WACI-PEx” profile for DIDComm has been released.

Scope #2: Anchoring layer

Of course, other forms of alignment are possible as well in the “bottom 3” layers of the traditional stack, while we wait on the ambitious transversal alignment specifications and the ongoing work to align and simplify cross-format support for VCs (and perhaps even multi-format VCs, as Hardman points out in the essay above).

The Identifiers and Discovery WG at DIF has long housed many work items to align on the lowest level of the stack, including general-purpose common libraries and recovery mechanisms. It has also received many donations that contribute to method-level alignment, including a recent DID-Key implementation and a linked-data document loader donated by Transmute. The group has also served as a friendly gathering point for discussing calls for input from the DID-core working group at W3C and for proposing W3C-CCG work items.

One particularly noteworthy initiative of the group has been the Universal Resolver project, which offers a kind of “trusted middleware” approach to DID resolution across methods. This prototype of a general-use server allows any SSI system (or non-SSI system) to submit a DID and get back a trustworthy DID Document, without needing any knowledge of or access to (much less current knowledge of or authenticated access to) the “black box” of the participating DID methods. While this project only extends “passive interoperability” to DIDs, i.e., only allowing DID document querying, a more ambitious sister project, the Universal Registrar, strives to bring a core set of CRUD capabilities to DID documents for DID methods willing to contribute drivers. Both projects have dedicated weekly calls on the DIF calendar, for people looking to submit drivers or get otherwise involved.
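As a rough illustration of what “submit a DID and get back a DID Document” looks like from the caller’s side, here is a minimal Python sketch against a Universal Resolver HTTP endpoint. The base URL is the project’s public development instance and the `/1.0/identifiers/` path and result keys reflect our understanding of the resolver’s interface; treat both as assumptions and check them against whatever instance you actually deploy. The helper names are our own.

```python
import json
from typing import Any, Dict
from urllib.parse import quote
from urllib.request import urlopen

# Assumption: public development instance; point this at your own deployment.
DEFAULT_RESOLVER = "https://dev.uniresolver.io/1.0/identifiers/"


def resolution_url(did: str, base: str = DEFAULT_RESOLVER) -> str:
    """Build the resolver URL for a DID, rejecting strings that are not DIDs."""
    if not did.startswith("did:"):
        raise ValueError(f"not a DID: {did!r}")
    # Keep ':' and '%' literal, since both are legal inside a DID.
    return base + quote(did, safe=":%")


def extract_did_document(resolution_result: Dict[str, Any]) -> Dict[str, Any]:
    """Pull the DID document out of a resolution result, or raise LookupError."""
    metadata = resolution_result.get("didResolutionMetadata") or {}
    if metadata.get("error"):
        raise LookupError(f"resolution failed: {metadata['error']}")
    document = resolution_result.get("didDocument")
    if not document:
        raise LookupError("resolution result contains no DID document")
    return document


def resolve(did: str, base: str = DEFAULT_RESOLVER) -> Dict[str, Any]:
    """Fetch and return the DID document for a DID (performs a network call)."""
    with urlopen(resolution_url(did, base), timeout=10) as response:
        return extract_did_document(json.load(response))
```

Note that the caller never touches a ledger or method-specific client: calling `resolve` with any DID whose method has a driver installed on the resolver should be enough, which is the “black box” property described above.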

Scope #3: “Agent”/Infrastructure Layer

There is another layer, however, in between DIDs and VCs, about which we haven’t spoken yet: the crucial “agent layer” in the ToIP/Aries mental model, which encompasses trusted infrastructure whether in or outside of conventional clouds. The Aries Project has scaled up an impressively mature ecosystem of companies and experimenters, largely thanks to the robust infrastructure layer it built (and abstracted away from application-layer developers and experimenters).

Until now, differences of strategy with respect to conventional clouds and infrastructures have prevented large-scale cooperation and standardization at this layer outside of Aries and the DIDComm project. Partly, this has been a natural outgrowth of the drastically different infrastructural needs and assumptions of non-human, enterprise, and consumer-facing/individual use cases, which differ more at this level than above or below. Partly, this is a function of the economics of our sector’s short history, largely influenced by the infrastructure strategies of cloud providers and telecommunication concerns.

This is starting to change, however, now that agent frameworks inspired by the precedent set by the Aries frameworks have come into maturity. Mattr’s VII Platform, the Affinidi framework SDK (including open-source components by DIF members Bloom, Transmute, and Jolocom), ConsenSys’ own modular, highly extensible and interoperable Veramo platform, and most recently Spruce ID’s very DID-method-agnostic DIDKit/Credible SDK all offer open-source, extensible, and scalable infrastructure layers that are driving the space towards greater modularity and alignment at this layer.

As these platforms and “end-to-end stacks” evolve into frameworks extensible and capacious enough to house ecosystems, DIF expects alignment and harmonization to develop. This could mean standardization of specific components at this layer, for example:

- The DIDComm protocol could expand into new envelopes and transports

- Control recovery mechanisms could be specified across implementations or even standardized on a technical and/or UX level

- Auditing or historical-query requirements could be specified to form a cross-framework protocol or primitive

- Common usages of foreign function interfaces, remote procedure calls like gRPC and JSON-RPC, or other forms of “glue” allowing elements to be reused or mixed and matched across languages and architectures could be specified as a community
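To give a flavor of the last item in the list above, here is a minimal sketch of JSON-RPC 2.0 used as “glue” between a caller and an agent framework. The envelope fields (`jsonrpc`, `id`, `method`, `params`) and the `-32601` “Method not found” code come from the JSON-RPC 2.0 specification; the method name `did.resolve` and the stub behind it are purely hypothetical and do not correspond to any existing framework’s API.

```python
import itertools
import json
from typing import Any, Callable, Dict

_ids = itertools.count(1)  # simple request-id generator


def make_request(method: str, params: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request with named parameters."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}
    )


def handle(raw: str, methods: Dict[str, Callable[..., Any]]) -> str:
    """Dispatch one JSON-RPC 2.0 request string against a table of callables."""
    request = json.loads(raw)
    handler = methods.get(request.get("method"))
    if handler is None:
        body: Dict[str, Any] = {
            "error": {"code": -32601, "message": "Method not found"}
        }
    else:
        body = {"result": handler(**request.get("params", {}))}
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), **body})


def fake_resolve(did: str) -> Dict[str, str]:
    """Hypothetical stub standing in for a framework's DID resolution."""
    return {"id": did}


# Hypothetical method table; a shared, community-specified table of names like
# this is the kind of thing that could be aligned across frameworks.
METHODS = {"did.resolve": fake_resolve}
```

Because the envelope is plain JSON, a caller written in one language could in principle drive a component written in another, provided both sides agree on the method names and parameter shapes.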

We are very much in early days, but some see on the horizon a day when frameworks that don’t cooperate with one another can’t compete with the ones that join forces. After all, adoption brings growing pains, particularly for the labor market-- aligning on architectures and frameworks makes onboarding developers and transferring experience that much easier to do!

Next Steps

I would encourage anyone who has read this far to pick at least one of the three scopes mentioned above and ask themselves how they are helping along this alignment process in their day-to-day work, and if they truly understand what major players at that level are doing. Often large for-profit companies pay the most attention to what their competitors are doing, but here it is important to think outside of competition and look instead at non-profit organizations, regulators, and coalitions of various kinds to really see where the puck is heading. Sometimes consensus on one level is blocking compromise somewhere else. It can be pretty hard to follow!

In recent articles, DIF has encouraged its members to think about an open-source strategy as comparably important to a business plan, a living document and guiding philosophy. I would like to suggest that the subset of DIF companies working with VCs and DIDs should also think of interoperability strategy and conformance testing as the most crucial pillar of that strategy-- if you cannot demonstrate interoperability, you might be asking people to take that strategy on faith!